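Apple's long-rumored smart glasses, Apple Glasses, finally have a credible timeline. According to a Bloomberg report, the dedicated chip Apple is developing for the glasses has reached a critical stage. The custom SoC is based on the Apple Watch chip architecture and strips out modules it does not need, such as graphics rendering, reportedly cutting power consumption to about one third of an iPhone chip's. Its core job is to process multi-camera data: Apple plans to fit the glasses with at least four sensors, including two ToF lenses for spatial positioning and two ambient light sensors, which would let the device build a 3D map of the scene in real time with millimeter-level accuracy.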

1. Estimated release time

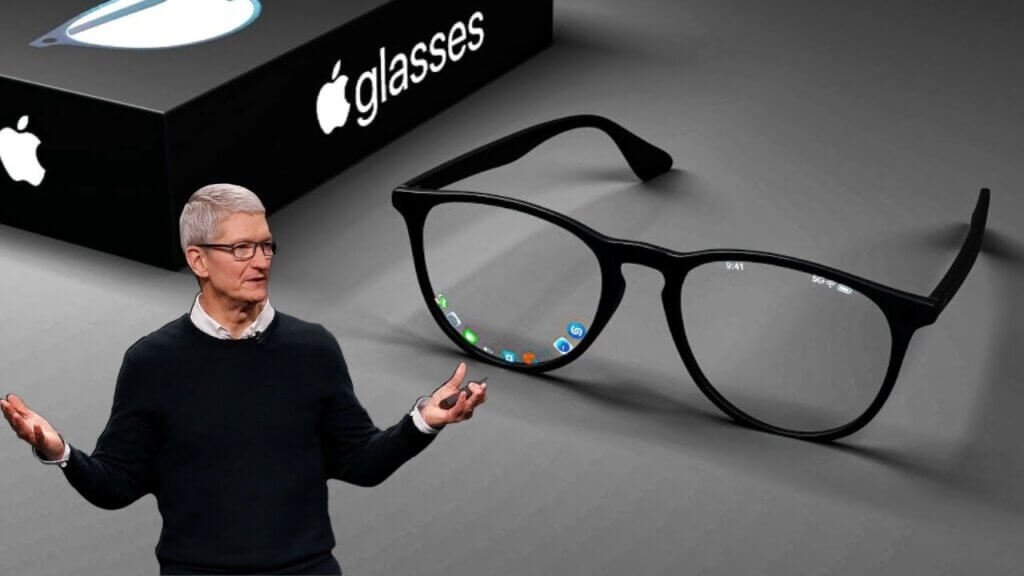

According to cross-checked reports from Bloomberg and The Verge, Apple is pursuing a "two-step" strategy to commercialize Apple Glasses. The non-AR version (codenamed N401) is expected to debut first, in Q4 2026, priced at US$329 to compete directly with Meta's Ray-Ban glasses. It focuses on light weight (under 48 grams) and basic functions such as taking photos and real-time translation. The AR version (N301) is planned for the second half of 2027 and adopts a split design: an external compute module exchanges data with the glasses over a millimeter-wave link with a latency of 5 milliseconds, and the target weight is 32 grams. This timetable lines up closely with TSMC's 3nm chip production plan (trial production in Q3 2026), which should help keep the supply of core hardware stable.

2. Product form: the evolution from "fashion accessories" to "computing hub"

1. Non-AR version: low-key functionalism

This version uses titanium alloy hinges and an ultra-light carbon fiber frame, supports prescription lens replacement, and is designed to carry on Apple's "technology + fashion" DNA. The goal is for it to become an everyday wearable like the Apple Watch. The core configuration includes:

- Multi-sensor collaboration: at least four sensors (two of them ToF lenses) build a 3D scene map with millimeter-level accuracy in real time, supporting spatial positioning and adaptive adjustment to ambient light.

- Energy-efficient chip: a custom SoC based on the Apple Watch architecture, with the graphics rendering module removed, reportedly draws about one third of the power of an iPhone chip, targeting 8 hours of battery life on a single charge.

- Basic interactive functions: the glasses integrate the Siri voice assistant, real-time translation, and health monitoring (such as heart rate and blood oxygen), and sync data to the iPhone and Apple Watch.

2. AR version: a disruptive bet on a split design

Apple adopts a split design of "glasses body + external compute module":

- Display technology breakthrough: dual 1080p Micro LED screens, supplied by a joint venture between Sony and TSMC, reach an outdoor brightness of 5,000 nits and support dynamic adjustment of light transmittance, so users can switch seamlessly between augmented reality and the real world.

- Interaction revolution: "photon glove" accessories currently in testing enable millimeter-level control through finger movements, and bone conduction combined with directional sound field technology allows private calls in public places.

- Health monitoring innovation: a non-invasive blood glucose monitoring module, jointly developed with Rockley Photonics, detects blood glucose levels in real time through tear analysis. If it receives FDA clearance, it could fundamentally change how diabetes is managed.
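To put the split design in perspective, here is a rough back-of-the-envelope sketch of how much raw video the external compute module would have to deliver to two 1080p displays. The 24-bit color depth and 60 fps refresh rate are assumptions for illustration only; the reporting gives neither figure, and a real link would almost certainly use compression.

```python
def uncompressed_video_bitrate(width, height, bits_per_pixel, fps, displays=2):
    """Return the raw (uncompressed) video bitrate in bits per second."""
    return width * height * bits_per_pixel * fps * displays


def bits_per_latency_window(bitrate_bps, latency_ms):
    """Return how many bits are transferred during one latency window."""
    return bitrate_bps * latency_ms / 1000


# Assumed values (not from the reporting): 24-bit color, 60 frames per second.
bitrate = uncompressed_video_bitrate(1920, 1080, bits_per_pixel=24, fps=60)
in_flight = bits_per_latency_window(bitrate, latency_ms=5)

print(f"Raw bitrate for two 1080p displays: {bitrate / 1e9:.2f} Gbit/s")
print(f"Data transferred per 5 ms window: {in_flight / 8e6:.2f} MB")
```

Under these assumptions the raw stream comes to roughly 6 Gbit/s, which suggests why a high-bandwidth link such as millimeter wave would be considered for connecting the glasses to the external module.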

3. Market challenge: balancing technological leadership against Meta's head start

Although Apple has an advantage in hardware and ecosystem, the competition should not be underestimated. Meta's Ray-Ban smart glasses have sold more than 2 million units in total, and their success stems from precise positioning: light weight, a low price, and social features. Apple needs to balance technological leadership with market acceptance. For example, the non-AR version needs to surpass Meta in basics such as camera quality and voice interaction response speed, while the AR version needs to solve the problem of a thin app ecosystem.

Apple has quietly begun building its developer ecosystem. It has reportedly provided more than US$200 million in funding to AR developers such as Niantic, on the condition that the first apps support spatial persistence, meaning that AR content created by users can remain anchored in a physical space over the long term. The strategy aims to replicate the “killer app” effect of the early iPhone and lock in user habits through early developers.

4. Future outlook: the core fulcrum of the “spatial computing” strategy

For Apple, Apple Glasses is not only a product but also the core fulcrum of its “spatial computing” strategy. Cook has repeatedly emphasized in internal meetings that AR technology will change people's lifestyles the way the iPhone did, and Apple Glasses is the vehicle for achieving that goal. In terms of technical path, Apple is building a full-scene perception network through the combination of glasses, earphones, and watches, which may be closer to consumer needs than Meta's “metaverse” concept.

Industry observers point out that the launch of Apple Glasses will accelerate the segmentation of the smart glasses market: the high end focuses on the AR experience, the mid-range on lightweight AI functions, and the low end on basic wearable devices. If Apple can launch the AR version on time in 2027, it could well replicate the iPhone's dominance of the smartphone market, but only if it resolves key issues such as display technology, the app ecosystem, and privacy protection.

In this wearable device revolution, Apple Glasses may not be the first mover, but with Apple's strong chip research and development capabilities, ecosystem integration, and relentless focus on user experience, it is likely to become the product that redefines the rules of the industry. As Cook said: “In the next ten years, we will see the combination of AI and wearable devices revolutionize the way humans interact with the digital world.” Apple Glasses may be the key to that future.
